In software development, things occasionally don’t go the way you expected them to: that’s not news to anyone who has tried to implement anything but the most trivial of applications.

You can pick up a task that looks like a small tweak to the front-end of a web application, then after a few hours of development you learn that you need to replace a Javascript library, which means you need a new build tool, which doesn’t have that custom plugin you were using for dealing with static files. Maybe you didn’t anticipate that your fancy new NoSQL database wouldn’t be able to scale up to meet the needs of that new customer you just signed. Suddenly, you are spending two weeks on a problem that you told a stakeholder would probably be done on a Friday afternoon.

As a team, there are two ways to manage this problem: you can treat them like car crashes, or you can treat them like plane crashes. Both can work, but it’s a choice you should make with your eyes open.

Safety Models

When an aeroplane crashes it is a big deal. We don’t expect it to happen, and so when it does it becomes a top priority to locate the cause and ensure that systems are changed so that it cannot happen again, even if that comes at great expense.

Recently, the entire global fleet of the Boeing 737 MAX was grounded after two similar high profile failures. Returning the aircraft to service will require a thorough investigation, mitigation strategies, and sign off from the Federal Aviation Administration. In short, nobody expected this failure to happen, and everybody expects all parties to be diligent enough to ensure that it never happens again.

This investigation is not confined to the aircraft themselves. Instead, we think about whole systems to identify and correct problems. Every aspect of the system is up for scrutinisation: pilot training, maintenance protocols, passenger behaviour, weather conditions, etc.

Contrast this to a car crash. We expect these to happen. There are around 1,800 fatalities from car crashes per year in the UK, and we expect that to continue. While models of car are sometimes recalled for safety reasons, we rarely apply the same level of systems thinking to road travel as a whole. A car crash is unlikely to force a change to driving tests, road design, availability of cars, etc. It would take a truly enormous number of crashes before system-wide changes were made.

These offer two contrasting approaches to getting things wrong. Are your mistakes more like car crashes or plane crashes?

Blame won’t get you anywhere

It’s important to remember that this whole process will be severely limited in its ability to teach you anything if you are working in an environment that blames individuals, and not systems, for mistakes. If you’re in the habit of firing junior team members, passing on blame to other teams, or making excuses to keep a clean performance record, then stop reading, focus on that problem, and come back later.

Both of these models assume that you have built a culture where your teams have the safety and integrity to retrospectively acknowledge mistakes, accept collective responsibility, and move forward productively.

The Car Crash Model

If you haven’t given it any thought, this is probably the model you are using by default. That might be fine, it might work for your team. In short, this is the model where you:

- Acknowledge that we occasionally make mistakes, sometimes expensive mistakes

- Predict that the cost of occasionally messing up in the exact same way is less than the cost of systemic fixes

- Measure the negative impact on your velocity and accept it as a constant

It isn’t the same as expecting everything to be perfect all the time and going into panic when it isn’t, that is unlikely to work for anyone. It is, for example, learning that your overly complex data model is costing you 50 hours per month in unexpected extra development effort, but agreeing that it is an acceptable price and moving on.

The Plane Crash Model

This is the model where we look to apply some amount of systems thinking as a matter of routine. That is, if a mistake hits a certain set of criteria, we will automatically resolve to see if there are system-wide corrections we can take to stop this category of error from happening again.

Of course, we still need to allow some contingency time for new mistakes, a small amount of time to conduct investigations, and a velocity impact if we want to take an Andon approach. However, we predict that the investment into fixing systems will be less than the cost of repeatedly making a mistake.

Why not both?

In reality, we are going to treat our mistakes both ways. That is, there will be some mistakes that strongly feel like they are just part of the job. Unlike a pilot, part of our job is combining technologies in new and interesting ways, and there will inevitably be some stumbles upon the way. There will also, however, be some mistakes where we think there is probably a critical lesson to be learned.

It’s important to agree how you will select which mistakes represent critical lessons for the team. The criteria for what counts as a critical mistake is going to be highly contextual. Here are a few that I’ve seen work in practice:

- In a time critical project where you are counting on consistently delivering according to some deadlines that are difficult to move, and where you were counting on consistent-ish sizing of tasks, it might make sense to choose any task that takes longer than a certain % over the average. For example, if you had 20 tasks that you expect to take roughly the same amount of time, most of them take between 3 and 6 working days, but 2 of them took 15 working days, those two could be good candidates.

- You could select tasks that unexpectedly required changing the tech stack: introducing a new library, replacing a third party service, etc. If you predicted that you could just add another CSS file but ended up having to introduce Sass and rewriting large amounts of styling, that could be a good candidate.

- If your tooling is able to support it, you might check for tasks that created a subsequent need to do some level of extra work because they introduced defects.

- You could select all tasks where extra work was identified at a late stage in your development cycle, such as at the user review stage.

A simple fact finding ceremony

A useful format I like to use is to spend 5 minutes answering each of three questions. This format is a fact-finding format, it isn’t supposed to generate solutions. The reason for this is that it’s easiest to find facts with a small number of people present — the people involved in the task, and a facilitator — but easier to make consensual decisions in a wider team format, such as a retrospective.

Each question represents one section of the ceremony. Keep it brief, we aren’t here to agonise over mistakes, we want to acknowledge them and move on. The longer you spend dwelling, the more likely you are to start beating yourself up over things you couldn’t have predicted. Each section should last 5 minutes, giving an overall ceremony length of 15 minutes plus some time to give instructions at each stage.

- What happened? [5 minutes]

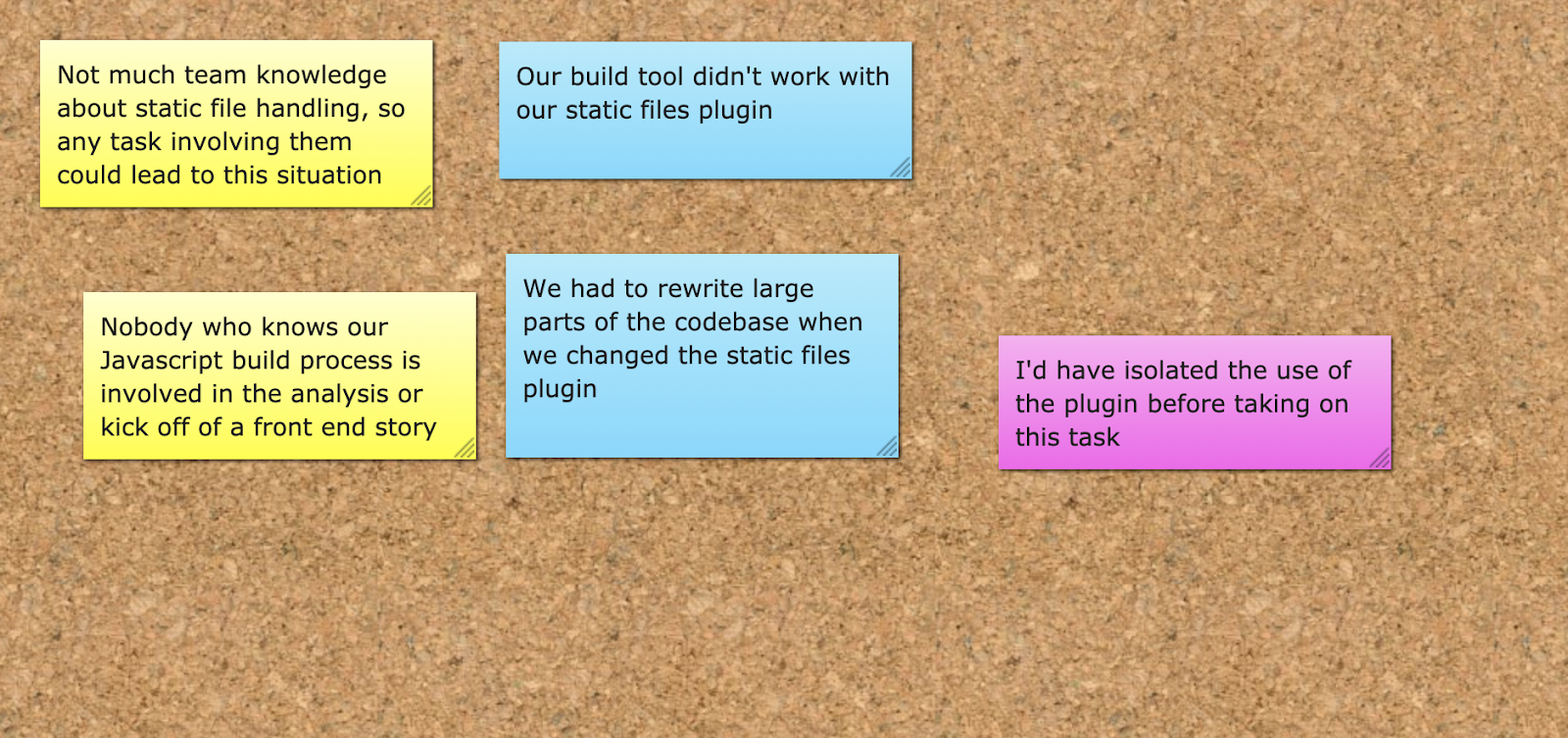

At this stage, the facilitator explains that we are only interested in dealing in facts: not opinions or hypothetical alternatives. “Our build tool isn’t compatible with our Node plugin” is a fact, but “We picked the wrong build tool” or “We shouldn’t be using that Node plugin” is not.

Let participants spend around 3 minutes writing down objective facts on blue sticky notes, one note per fact. Arrange them in a vertical line down the middle of a whiteboard, then spend two minutes sharing the output.

Be strict on what counts as a fact at this stage. If in doubt, give it back to the person that wrote it. There will be plenty of space to express opinions, but you want everyone to agree on what is indisputably and unanimously true before exploring them. - If we knew what we know now, what would we have done differently? [5 minutes]

You don’t want to end up with more points than you can discuss here. Let participants add pink sticky note one at a time that says what they wish they’d have done differently, and ask them to spend about 30 seconds explaining it. Try to limit follow up questions if those questions are actually different opinions disguised as questions – it’s OK to generate contradictory ideas here.

Arrange the pink sticky notes in a vertical line to the right of the blue ones. If they closely relate to one of the blue notes, keep them physically close on the board. - How can we detect if this is about to happen again? [5 minutes]

Allow participants 5 minutes to write ways of detecting this category of error on yellow sticky notes. We want to cover as much ground as possible here, so make sure they are added to the left of the blue notes as soon as they are written so participants know what hasn’t yet been covered.

Again, where possible yellow notes should be placed closest to the cluster of pink and blue notes that they relate to.

The only action item generated by this meeting should be to share the output with the rest of the team so that they have the context to propose a change if they feel it’s appropriate. This could be sharing a photo of the sticky notes produced, or a brief update after a daily stand-up.

Don’t ignore mistakes

Your planning process should explicitly acknowledge that the team will get things wrong. You can deal with these like car crashes: frequent, expected, accepted; or you can treat them like plane crashes: exceptional, studied as a system, and leading to process improvements.

What does this process look like on your team? Continue the conversation on Twitter.

About the Author

Lead Engineer at Made Tech